Federated Learning

Full Title

A means of learning where the nodes can operate independently to create a common understanding of a problem.

Goals

- Accuracy - in predicting when a pandemic is likely to occur, given that false positives are less problematic than false negatives.

- Universality - in predicting all types of pandemic

- Reproducibility - in the face of growing skepticism about the results of AI.[1]

- Adaptability - in the face of constant advances in knowledge of AI.

Context

- Most human learning is federated in the sense that each human operates as an independent entity which receives inputs and creates outputs.

- In this pattern we model the human tendency to spread processing to each node with a similar hierarchy of capability and sophistication among the nodes.

- This pattern also mimics the Edge Computing pattern of 5G, which pushes computing and data gathering out to the edge nodes.

Definitions

Federated learning is a machine learning setting where multiple entities (clients) collaborate in solving a machine learning problem, under the coordination of a central server or service provider. Each client's raw data is stored locally and not exchanged or transferred; instead, focused updates intended for immediate aggregation are used to achieve the learning objective. [2]
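As a minimal sketch of the "focused updates intended for immediate aggregation" in this definition, the example below combines client updates with sample-weighted federated averaging. Federated averaging is one common aggregation rule chosen here for illustration; the definition itself does not prescribe it, and the function name, client weights, and sample counts are all hypothetical.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Combine client updates into one model, weighting each by its sample count.

    Each update is (locally_trained_weights, n_samples). The clients' raw
    data never appears here; only the updates reach the aggregator.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no samples reported by any client")
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


# Two hypothetical clients report locally trained weights and sample counts.
client_updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
global_weights = federated_average(client_updates)
print(global_weights)
```

The client with 30 samples pulls the combined weights three times as hard as the client with 10, which is the usual motivation for weighting by sample count.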

Decentralized learning might be a better-fitting term for this work, as there is NO SINGLE CENTRAL AUTHORITY. Rather, the Leaf Nodes are structured to accept requests from a variety of sources as well as to perform their own local learning suited to their own local requests. Note that this could also be viewed as learning with more than one federation.

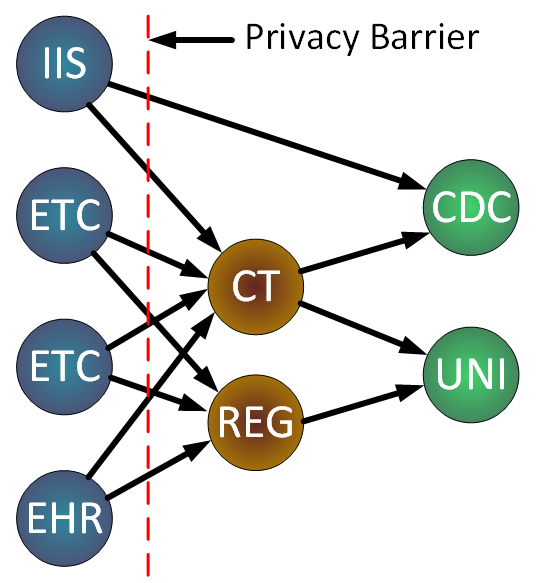

A Hierarchical Directed Graph

One solution is to organize all nodes that run any learning algorithm into a multiplicity of trees. Some paths always move towards the root of each tree and away from the leaves; other paths go from the root out to the leaves to pass new requirements to the leaves.

- IIS = Immunization Information Service

- EHR = Electronic Health Record

- CT = Clinical Trial

- REG = Regional network

- CDC = Governmental Agency

- UNI = Research Institution

- ETC = other data sources
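The multiplicity of trees described above can be sketched as a directed acyclic graph in which a leaf may report to more than one parent. This is only an illustration under stated assumptions: the `LearningGraph` class, the node names such as `EHR-1`, and the particular edges are hypothetical and are not taken from the figure.

```python
from collections import defaultdict
from typing import Dict, Set


class LearningGraph:
    """Directed acyclic graph in which a node may report to several parents."""

    def __init__(self) -> None:
        self.parents: Dict[str, Set[str]] = defaultdict(set)
        self.children: Dict[str, Set[str]] = defaultdict(set)

    def add_edge(self, child: str, parent: str) -> None:
        """Record that `child` passes its learning up to `parent`."""
        self.parents[child].add(parent)
        self.children[parent].add(child)

    def roots_reached(self, node: str) -> Set[str]:
        """Roots that learning from `node` eventually flows up to."""
        ups = self.parents.get(node)
        if not ups:
            return {node}
        return set().union(*(self.roots_reached(p) for p in ups))

    def leaves_reached(self, node: str) -> Set[str]:
        """Leaves that a new requirement issued at `node` flows down to."""
        downs = self.children.get(node)
        if not downs:
            return {node}
        return set().union(*(self.leaves_reached(c) for c in downs))


# Hypothetical topology: EHR-1 belongs to two trees (rooted at CDC and UNI).
graph = LearningGraph()
graph.add_edge("EHR-1", "REG-1")
graph.add_edge("IIS-1", "REG-1")
graph.add_edge("REG-1", "CDC")
graph.add_edge("EHR-1", "UNI")
graph.add_edge("CT-1", "UNI")

print(sorted(graph.roots_reached("EHR-1")))
print(sorted(graph.leaves_reached("CDC")))
```

The sketch assumes the graph has no cycles; a production version would need to check that when edges are added.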

Privacy Enhancing

- Three key components of privacy are used in this context:[3]

- User understanding and consent;

- Data Minimization (collect only the data needed for the specific computation); and

- Anonymization (the final released output of the computation does not reveal anything unique to an individual) of released aggregates.

- An initiative of the US and UK governments, Privacy Enhancing Technologies, has one track focused on Transforming Pandemic Response and Forecasting through Federated Learning with End-to-End Privacy.

- To make the graph privacy-enhancing, we demand that any personally identifiable information (PII) is restricted to the leaves, meaning that it is never processed anywhere but within the medical facility where it is already maintained with full personal data. Only the learning from the data is passed up to the aggregator.

- The question to be determined is whether this privacy guarantee will adversely impact the accuracy of the learning process. The test matrix proposed here will address that.

- Another question arises if a small hospital in some impoverished state or country delivers learning that shows particularly poor results. Should we consider that learning to be data leakage about the population served by that hospital? Perhaps those impoverished regions would not want their data to be known apart from the broader learning result.[4]

Key Deliverables

- An interface to the Leaf Nodes that accepts requests for new learning and passes back learning into multiple aggregators.

- A means to motivate the Leaf Nodes to participate in a variety of research goals.
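One possible shape for the Leaf Node interface is sketched below: the leaf accepts a request for new learning, runs it against records that stay local, and queues only the resulting learning for whichever aggregator asked. Every name here (`LearningRequest`, `LeafNode`, `vaccination_rate`, the record fields) is a hypothetical placeholder rather than a defined API, and the "learning" is a simple aggregate standing in for a real model update.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Record = Dict[str, object]
Trainer = Callable[[List[Record]], Dict[str, float]]


@dataclass
class LearningRequest:
    request_id: str
    aggregator: str  # which aggregator asked, and so where the result goes
    task: str


@dataclass
class LeafNode:
    name: str
    records: List[Record]          # PII stays here and is never sent
    trainers: Dict[str, Trainer]   # tasks this leaf is willing to run
    outbox: Dict[str, List[dict]] = field(default_factory=dict)

    def handle(self, request: LearningRequest) -> bool:
        """Run the requested task locally; return False if the leaf declines."""
        trainer = self.trainers.get(request.task)
        if trainer is None:
            return False
        learning = trainer(self.records)
        self.outbox.setdefault(request.aggregator, []).append(
            {"request_id": request.request_id, "leaf": self.name, "learning": learning}
        )
        return True


def vaccination_rate(records: List[Record]) -> Dict[str, float]:
    """Toy 'learning': an aggregate that carries no per-patient fields."""
    if not records:
        return {"n": 0.0, "rate": 0.0}
    vaccinated = sum(1 for r in records if r["vaccinated"])
    return {"n": float(len(records)), "rate": vaccinated / len(records)}


leaf = LeafNode(
    name="EHR-1",
    records=[
        {"patient_id": "p1", "vaccinated": True},
        {"patient_id": "p2", "vaccinated": True},
        {"patient_id": "p3", "vaccinated": False},
        {"patient_id": "p4", "vaccinated": True},
    ],
    trainers={"vaccination_rate": vaccination_rate},
)
accepted_cdc = leaf.handle(LearningRequest("r1", "CDC", "vaccination_rate"))
accepted_uni = leaf.handle(LearningRequest("r2", "UNI", "vaccination_rate"))
declined = leaf.handle(LearningRequest("r3", "UNI", "unknown_task"))
```

Because the leaf chooses which tasks appear in `trainers`, it stays free to decline a request, which is where any participation incentive would have to act.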

Intelligent Goals

The objective of the effort is a description of the results that can be passed from the Leaf Nodes to the final analysis. Only recently has AI begun to address this, as described in the wiki page Intelligent Goals.

Using Test Data

- The synthetic test data will not likely be distributed as it would be in real life.

- The goal is to determine whether results obtained with uniform sorting miss the effects of selecting data and federating populations in a manner that mirrors real life. The following plan is designed to show the impact of distributing data the way that data is likely to be separated in the real world.

- Break the synthetic data into two groups that are randomly selected from the data and obtain a result with a single aggregation of those two sets.

- Break the population into 50 groups with random selection.

- Break the population into 50 groups in which deliberately selected subpopulations are overrepresented and which contain widely different numbers of individuals.

- Aggregate each selection into a test learning.

- Measure the discrepancy between the results of each selection to see the impact of real-world distributions on the federated learning results.
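The plan above can be sketched end to end with a toy stand-in for the learning step. The sketch makes several assumptions: the synthetic population, the risk score, the group-size rule, and all function names are hypothetical; each group's "learning" is just its local outcome rate; and the aggregator is deliberately naive, giving every group equal weight regardless of its size.

```python
import random
from statistics import mean
from typing import Dict, List

Person = Dict[str, float]


def make_population(n: int, rng: random.Random) -> List[Person]:
    """Synthetic individuals: a risk score in [0, 1) and an outcome drawn from it."""
    people = []
    for _ in range(n):
        risk = rng.random()
        people.append({"risk": risk, "outcome": 1.0 if rng.random() < risk else 0.0})
    return people


def random_groups(people: List[Person], k: int, rng: random.Random) -> List[List[Person]]:
    """k groups of near-equal size chosen by random selection."""
    shuffled = list(people)
    rng.shuffle(shuffled)
    return [shuffled[i::k] for i in range(k)]


def skewed_groups(people: List[Person], k: int) -> List[List[Person]]:
    """k groups sorted by risk; group j is about j times the size of group 1."""
    ordered = sorted(people, key=lambda p: p["risk"])
    unit = len(ordered) // (k * (k + 1) // 2)
    groups, start = [], 0
    for j in range(1, k + 1):
        end = len(ordered) if j == k else start + unit * j
        groups.append(ordered[start:end])
        start = end
    return groups


def local_learning(group: List[Person]) -> float:
    """Toy learning step: the outcome rate seen by one group."""
    return mean(p["outcome"] for p in group)


def aggregate(groups: List[List[Person]]) -> float:
    """Naive aggregator: every group's result counts equally."""
    return mean(local_learning(g) for g in groups)


rng = random.Random(0)
population = make_population(5100, rng)
pooled = local_learning(population)  # result with all data in one place

gap_two_random = abs(aggregate(random_groups(population, 2, rng)) - pooled)
gap_fifty_random = abs(aggregate(random_groups(population, 50, rng)) - pooled)
gap_fifty_skewed = abs(aggregate(skewed_groups(population, 50)) - pooled)

print(gap_two_random, gap_fifty_random, gap_fifty_skewed)
```

With equal-sized random groups the aggregated rate matches the pooled rate, while the skewed split drifts away from it because the small low-risk groups count as much as the large high-risk ones. A real test would replace `local_learning` and `aggregate` with the actual model training and aggregation rule.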

Other Work

What existing work has shown is that machine learning has not lived up to expectations, where the expectations were not actually articulated when the projects began. It turns out to be very difficult to get to your goal when you don't know what it is.

- Google's Federated Learning of Cohorts (FLoC) is an algorithm that essentially sorts people into groups of thousands of other people with similar browsing habits. Using machine learning algorithms, a person’s browser puts the user into a group, so all data is kept local to the machine. However, advertisers can still serve personalized ads without issue. The goal here is to keep people in groups large enough that no one can be identified, even with IP associations and other data. Furthermore, as explained on the GitHub page for the project, having a group interest exposed to the web is a significantly better option than what is available now.[5]

- Google kills off FLoC: Federated Learning of Cohorts, Google’s controversial project for replacing cookies for interest-based advertising by instead sorting users into groups with comparable interests, is dead. In its place, Google today announced a new proposal: Topics. The idea here is that your browser will learn about your interests as you move around the web. It’ll keep data for the last three weeks of your browsing history and as of now, Google is restricting the number of topics to 300, with plans to extend this over time. Google notes that these topics will not include any sensitive categories like gender or race.

References

- [1] Will Knight, Sloppy Use of Machine Learning Is Causing a ‘Reproducibility Crisis’ in Science, Wired (2022-08-10) https://www.wired.com/story/machine-learning-reproducibility-crisis/

- [2] P. Kairouz, et al., Advances and open problems in federated learning, Foundations and Trends in Machine Learning 14 (1-2) (2021) https://arxiv.org/abs/1912.04977

- [3] Kallista Bonawitz +3 (Google), Federated Learning and Privacy, ACM Queue (2021-11-16) https://queue.acm.org/detail.cfm?id=3501293

- [4] Jiale Chen +4, Beyond Model-Level Membership Privacy Leakage: an Adversarial Approach in Federated Learning, IEEE 2020 Int'l conference https://ieeexplore.ieee.org/abstract/document/9209744

- [5] Nathan Ord, What Is A FloC, And How Will Google Use It to Replace Third-Party Tracking Cookies?, Hot Hardware (2021-01-25) https://hothardware.com/news/google-proposes-floc-to-replace-third-party-cookies